It has been a while since I wrote something on this subject. Perhaps I'll get back to it, perhaps not. Today's article isn't extensive or particularly analytical, and it leans toward a "warning" or, per the subtitle, a public service announcement. It is also a stream-of-consciousness piece, something I only do occasionally.

The usage of “AI”, mostly Large Language Models and image and video generation, is now widespread, producing a continuous stream of what seems to be endless slop, if you ask me. This societal adoption has had many cascading effects, and while I will mention some of them, most are not the focus today.

If you read back through older Fourth Option articles and a couple of articles in Things Hidden, especially the ones centered on AI and LLMs, you will often find my criticism of OpenAI and Anthropic’s diehard belief in scale: the idea that adding more GPUs and hardware is all you need to achieve human-level knowledge generalization, with models capable of learning and executing tasks like human experts (a somewhat gross simplification).

This scaling strategy was made worse when the current American administration saw the writing on the wall and went all in on backing many circular financing deals. If you are asking why, it is simple: data center and AI investment was the only thing keeping the American economy, and through a cascade effect the global economy, afloat. Demand from both enterprises (companies) and consumers feeds this cycle, and video generation by itself was already a problem.

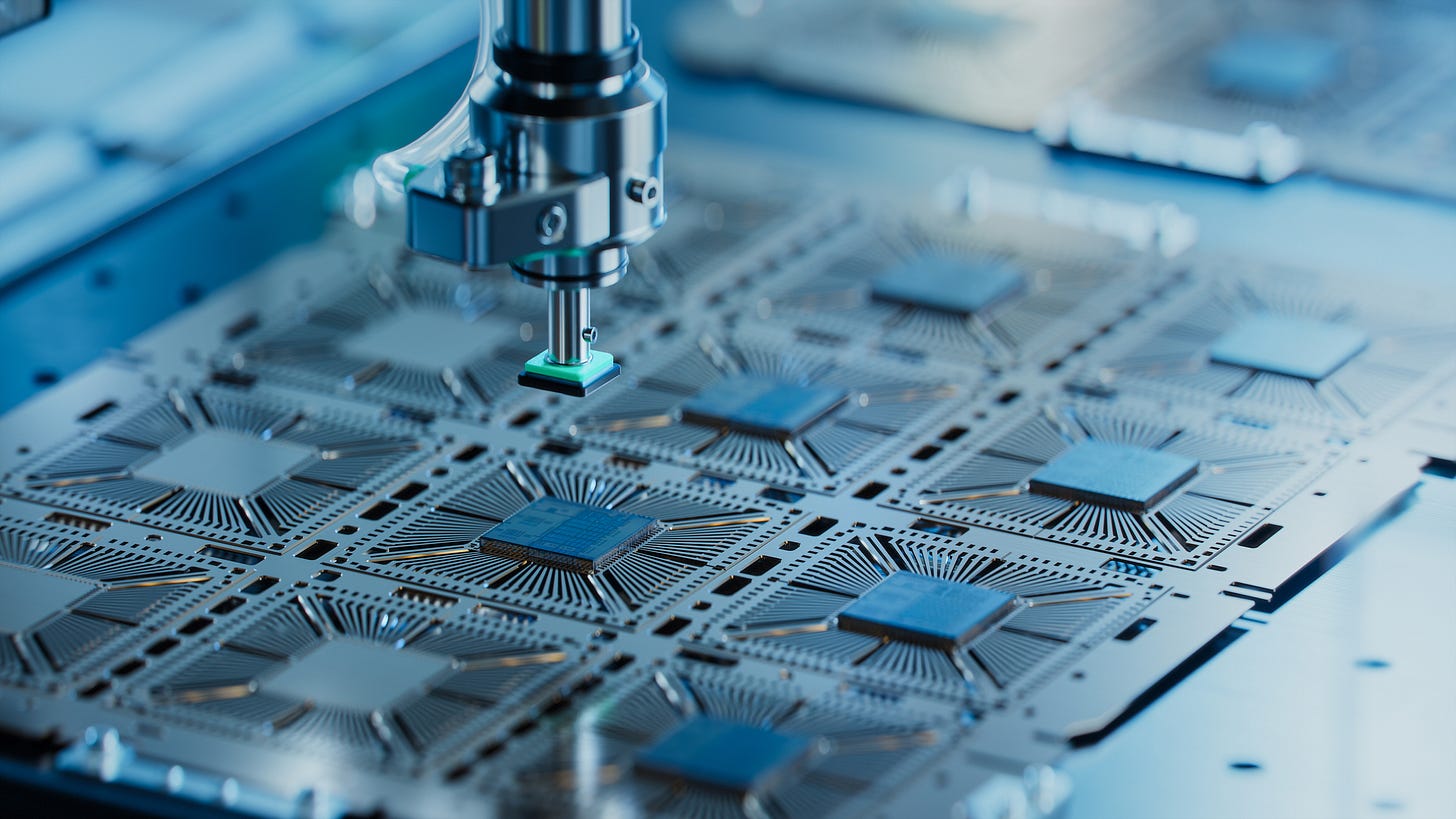

All this global frenzy for data centers with the variables above translates into one thing. Demand for the hardware, the backbone of the infrastructure, would and did explode. In simple terms, data centers are made of server racks.

These racks consist of many components, but an easy way to describe the racks is “a big enclosure for a bunch of very beefy computers”. Everyone knows how critical GPUs are to AI, from training to inference (when you ask something and the AI outputs an answer), but AI also uses CPUs, networking cables, storage, and RAM.

RAM can be described as the fast, temporary, short-term memory of a computer or any modern device. Any app, software, tool, or process uses RAM in some way to run, and RAM is CRITICAL to AI. An LLM uses RAM to access data much faster than it could from storage, and faster RAM allows quicker access and data movement between the memory and the processor, reducing latency and speeding up inference. Also, in very simplistic terms, you can offload a large part of a big model to RAM, enabling you to “save” GPU VRAM, which is always more limited.
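To make the offloading idea concrete, here is a rough back-of-the-envelope sketch. The model size, precision, and VRAM figures are illustrative assumptions, not numbers for any specific product, and the estimate counts only the model weights, ignoring any extra working memory needed at inference time.

```python
def weights_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * bytes_per_param


def split_across_memory(model_gb: float, vram_gb: float) -> tuple[float, float]:
    """Return (GB that fit in VRAM, GB that must be offloaded to system RAM)."""
    in_vram = min(model_gb, vram_gb)
    return in_vram, model_gb - in_vram


# Hypothetical example: a 70-billion-parameter model stored at
# 2 bytes per parameter (16-bit weights) on a GPU with 24 GB of VRAM.
model_gb = weights_gb(70, 2)
in_vram, in_ram = split_across_memory(model_gb, 24)
print(f"weights: {model_gb:.0f} GB | VRAM: {in_vram:.0f} GB | system RAM: {in_ram:.0f} GB")
```

Under these assumed numbers, most of the model would have to live in system RAM, which is why large amounts of fast RAM matter so much here.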

Here is the tricky part. Everything uses RAM. VRAM is itself a kind of RAM. RAM production is very material-intensive and consumes a lot of wafers. HBM (high-bandwidth memory; imagine a faster, specialized RAM designed specifically for very fast data transfer) is built by stacking many RAM chips and packaging them together, meaning it uses even more material.

In October, OpenAI signed a deal with two of the biggest RAM producers in the world, Samsung and SK Hynix, two of the three RAM producers that control over 80% of the global market. While the exact terms remain confidential, industry analysts at TrendForce and DRAMeXchange interpret these deals as securing priority allocation for HBM and next-gen DRAM, locking down supply lines that would otherwise feed consumer markets.

Shortly afterward, Micron, the only American producer of cutting-edge memory, announced a strategic pivot that sent shockwaves through the industry. They're dramatically reducing consumer DRAM allocation to focus almost exclusively on HBM and data center products. Both companies issued public statements citing production constraints, which forced data center builders to lock in long-term purchase agreements at premium prices, squeezing consumer supply chains from both ends.

The end result of this RAM apocalypse:

G.Skill issues statement on surging DRAM memory prices and the company totally blames AI

There are dozens of articles on this, and a lot of social media talk.

DDR5 is experiencing the fastest and steepest increase in price in its history. High-end DDR5 kits that cost $200-250 in August are now selling for $500-600, and higher-capacity kits (more GB per stick) are priced even higher, while server-grade RAM has seen comparable spikes that cascade directly into AI rack costs. A high-end consumer PC is now roughly $800-1,500 more expensive than it was in early October, with memory and storage accounting for the vast majority of that jump. Both laptops and smartphones will now ship with less RAM.
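For a sense of scale, the DDR5 kit prices quoted above can be turned into percentage increases. This is a minimal sketch using only the $200-250 and $500-600 ranges from the text; pairing the ends of the two ranges is my own simplification.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage increase when going from an old price to a new price."""
    return (new - old) / old * 100


# Smallest possible jump: top of the August range to bottom of the current range.
smallest = percent_increase(250, 500)
# Largest possible jump: bottom of the August range to top of the current range.
largest = percent_increase(200, 600)
# Midpoint of each range, as a rough "typical" case.
typical = percent_increase(225, 550)

print(f"smallest: {smallest:.0f}% | typical: {typical:.0f}% | largest: {largest:.0f}%")
```

In other words, even the most favorable reading of those ranges is a doubling in price within a few months.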

GPUs will also get more expensive. There is a rumor, which I would classify as an actual forecast because it is the likely outcome, that production of consumer-grade GPUs will be cut by up to 40% because of RAM pricing and difficult sourcing. Nvidia makes thousands of dollars in profit on each AI-focused GPU it sells, compared with a few hundred dollars per consumer GPU.

This is in line with my 2023/2024 forecast that, in some way, normal people would be priced out of compute. Now, not only will most people end up priced out of compute, they will also be priced out of owning hardware itself. And then you can push every single person to the cloud. You will own nothing and be happy. So consoles, phones, and PCs will be a lot more expensive over the next 2 years.

Another side effect of all of this, given how storage-intensive AI video and all this data are, is how expensive storage is becoming. Kingston sounds the SSD pricing alarm as the company has seen a 246% increase in NAND wafer prices, with the biggest increase ‘within the last 60 days’. While everything here has a severely negative impact, the storage shortage and absurd price spike are the most significant, because how else will you save anything you want? It will also affect the pricing of cloud storage.
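A quick note on what a 246% increase means in practice, since percentage increases are easy to misread. The sketch below converts the quoted figure into a price multiplier and also shows how far prices would have to fall to return to the old level. The function names are just illustrative helpers.

```python
def multiplier_from_increase(pct_increase: float) -> float:
    """A price that rose by pct_increase percent is this many times the old price."""
    return 1 + pct_increase / 100


def drop_needed_to_revert(pct_increase: float) -> float:
    """Percentage drop from the new price needed to get back to the old price."""
    return pct_increase / (100 + pct_increase) * 100


nand_increase = 246  # percent, the NAND wafer figure quoted above
print(f"new price is {multiplier_from_increase(nand_increase):.2f}x the old price")
print(f"a {drop_needed_to_revert(nand_increase):.1f}% drop would be needed to return to the old price")
```

So a 246% increase is roughly a 3.5x price, and undoing it would take a drop of about 71%, not 246%.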

With all this information and context at hand, here is where the PSA comes in. If you want, or “worse yet” need a computer or laptop to work, you should buy it right now. If you want to upgrade your smartphone, if you want a console to play games, or a new tablet, you should buy it right now. The average increase in price will be around 20%, but I expect higher increases.

In the US, there are a lot of deals on pre-built computers (computers that come already built, where you don’t get to choose the hardware), and laptop deals are also a good option in case you don’t need a desktop. Sadly, if you are building a computer, there is no way around RAM. Slower RAM is expensive and fast RAM is absurdly expensive. GPUs have a stable price for now but will get a lot more expensive, and storage is getting more and more expensive.

A bitter truth

While I don’t want to delve into the absolutely corrupt financing and deals going on among all the Western AI players, these dynamics create profound long-term problems. The first is the self-serving nature of Sam Altman, who promises things he can’t know he can deliver and prices consumers out of compute while facing increasingly significant pressure from OpenAI’s Chinese competition, which, as he is starting to realize, he likely can’t beat in research. Altman’s backup plan is to price everyone out and become one of the world’s largest AI cloud providers.

The second is merely history repeating itself. As I forecasted continuously through the last decade, China has made massive strides on multiple fronts, achieving parity and often surpassing the West in specific markets, with EVs, batteries, and drones being the main ones lately. China has been pursuing advanced tech independence for years, and in the last 3, pushed by dubious, moronic Western international policy, it has made fast advancements.

Within 5 to 10 years, the same thing that occurred with solar panels, electric vehicles, battery technology, and drones will occur with RAM. China always does this: it reverse engineers something, achieves parity, pours in heavy capital investment, achieves economies of scale, and basically wipes out the competition by providing quality products at much lower prices.

Repeating myself: consider buying any electronics you may need right now, especially for work, because I forecast that the market may stabilize only around 2028. Even with a crash, prices won’t crater as much as people think, because a data center is an infrastructure service. You can simply shift its use from serving Language Models and creating AI slop towards other digital services.

The difference this time is we’re not just ceding a consumer market to them, we’re potentially handing China a critical national security component because a handful of Silicon Valley cultists believed their own scaling mythos and thought the Chinese wouldn’t exploit it. Remember my analysis over time: China has exploited the entirety of the pandemic and post-pandemic dynamics as a very refined 6th-gen warfare hybrid weapon. This may be the most expensive unforced error in recent memory.

Thank you for your support, and see you soon!

Before I send my yearly Christmas e-mail, I will likely send another article, shorter in nature, a bit of gloating, but it is remarkable science being done. Herpes virus and misfolded proteins =).

I wish everyone a great weekend ahead.

Thanks. As just a guy on a small farm raising kids, you have been just super. I'll make more money and send you some someday, I hope. If I could easily dash you some coin without complexity, like out of my pocket into yours, I would do it in a heartbeat.

Not being in these tech fields, the impacts of the markets and activity are somewhat obscure to me. So thank you for bringing attention to them. Honestly, I am praying to the Sun to save us from ourselves. Seems some kind of major crisis is in the air. Governments are making up narratives to shift into authoritarian positions around the world, most noticeably among the Western alliance. And tech is being exploited to mobilise political activities. Can't tell if it's because of BRICS or if BRICS was a timely response to the growing problem, but it surely plays a part in the global picture.

How can population not be an issue? I think the simple calculus puts the consumer as essential to wealth, but from a perspective of power, robots may be a sufficient surrogate, if only still too risky.

So it really looks to me like all roads lead to population reduction. It's just a matter of optimum timing. Critical data centre infrastructure seems very much like an end game sort of thing. And the players may align across national security boundaries, or not.

In the last few years I have found myself trying to understand brutality better, as it's brutality that underpins our societies.

When faced with this idea of getting more tech or failing to take advantage before the inflation apocalypse, fuck, I don't even know how an urban city dweller approaches that. In my situation I am considering getting panels and hours of batteries. Small farms are under pressure, as supply chain issues for tractor parts are a problem. But a bigger, factory-on-wheels type of scale, with robotics and GPS and such for cash cropping, is moving in.

Seeing factory farming and appreciating the farming of livestock, it seems that those in power look at much of labor in the same way. Redundancy, or low value due to market surplus, means a cull. Culls are a reset that saves losing on marginal costs and inputs. Those that make the decisions and pull the strings tend to work at scales that don't see the life any more.