A few days ago a viral tweet suddenly popped up in my timeline, and while I have come to despise the current administration and the algorithmic changes on Twitter, this one time I was glad it worked “as intended”. In case you don’t know, the current owner of Twitter is “big mad” at Substack and is actively engaging in platform manipulation, à la Jack and old Twitter, to disrupt anything and everything to do with Substack. That is not the point here, though.

The viral tweet in question.

Laura is not your average random Twitter “influencer”. She is the editor-in-chief of Scientific American, arguably one of the most “respected” publications in the English language. The following are excerpts from the linked article.

Conspiracy Theories Can Be Undermined with These Strategies, New Analysis Shows

A new review finds that only some methods to counteract conspiracy beliefs are effective. Here’s what works and what doesn’t

When someone falls down a conspiracy rabbit hole, there are very few proved ways to pull them out, according to a new analysis.

The study is a review of research on attempts to counteract conspiratorial thinking, and it finds that common strategies that involve counterarguments and fact-checking largely fail to change people’s beliefs. The most promising ways to combat conspiratorial thinking seem to involve prevention, either warning people ahead of time about a particular conspiracy theory or explicitly teaching them how to spot shoddy evidence.

“We’re still in the early days, unfortunately, of having a silver bullet that will tackle misinformation as a whole,” says Cian O’Mahony, a doctoral student in psychology at University College Cork in Ireland, who led the study. It was published today in the journal PLOS ONE.

Counteracting conspiracy beliefs is important because beliefs in conspiracies can encourage people to act in harmful ways, says Kathleen Hall Jamieson, a professor of communication and director of the Annenberg Public Policy Center at the University of Pennsylvania, who was not involved in the new review. The people who stormed the U.S. Capitol on January 6, 2021, believed the 2020 presidential election had been stolen, for example. And believers in COVID vaccine conspiracies put themselves at risk from the disease by refusing to get vaccinated. But the field is so young that trying to compare individual studies is fraught, Jamieson says.

“There are so many different definitions and specifications of what is a conspiracy belief and a conspiracy mindset that it’s very difficult to aggregate this data in any way that permits generalization,” she says. The comparisons in the new review are a suggestive starting point, Jamieson adds, but shouldn’t be seen as the last word on conspiracy interventions.

Studies often blur the lines between conspiracy theory, disinformation and misinformation, O’Mahony says. Misinformation is simply inaccurate information, while disinformation is deliberately misleading. Conspiracy beliefs, as O’Mahony and his colleagues define them, include any beliefs that encompass malicious actors engaging in a secret plot that explains an important event. Such beliefs are not necessarily false—real conspiracies do happen—but erroneous conspiracy theories abound, from the idea that the moon landing was faked to the notion that COVID vaccines are causing mass death that authorities are covering up.

For individuals interested in challenging conspiracy thinking, the authors of the new review provide some tips:

Don’t appeal to emotion. The research suggests that emotional strategies don’t work to budge belief.

Don’t get sucked into factual arguments. Debates over the facts of a conspiracy theory or the consequences of believing in a particular conspiracy also fail to make much difference, the authors found.

Focus on prevention. The best strategies seem to involve helping people recognize unreliable information and untrustworthy sources before they’re exposed to a specific belief.

Support education and analysis. Putting people into an analytic mindset and explicitly teaching them how to evaluate information appears most protective against conspiracy rabbit holes.

One of the organizations responsible for catapulting interest in, and use of, prebunking is none other than Alphabet, Google’s parent company.

In the days before the 2020 election, social media platforms began experimenting with the idea of “pre-bunking”: pre-emptively debunking misinformation or conspiracy theories by telling people what to watch out for.

Now, researchers say there’s evidence that the tactic can work — with some help from Homer Simpson and other well-known fictional characters from pop culture.

In a study published Wednesday, social scientists from Cambridge University and Google reported on experiments in which they showed 90-second cartoons to people in a lab setting and as advertisements on YouTube, explaining in simple, nonpartisan language some of the most common manipulation techniques.

The cartoons succeeded in raising people’s awareness about common misinformation tactics such as scapegoating and creating a false choice, at least for a short time, they found.

I have written about Memes before; half of the linked substack explains the concept from the perspective of warfare, along with the impact it has had on society multiple times in recent years. Google recently carried out the biggest “prebunking” experiment to date on social media.

“Two videos, each prebunking a different narrative, ran across YouTube, Facebook, Twitter and TikTok in each of the three countries. One video focused on narratives scapegoating Ukrainian refugees for the escalating cost of living while the other highlighted fearmongering over Ukrainian refugees’ purported violent and dangerous nature,” Google explained.

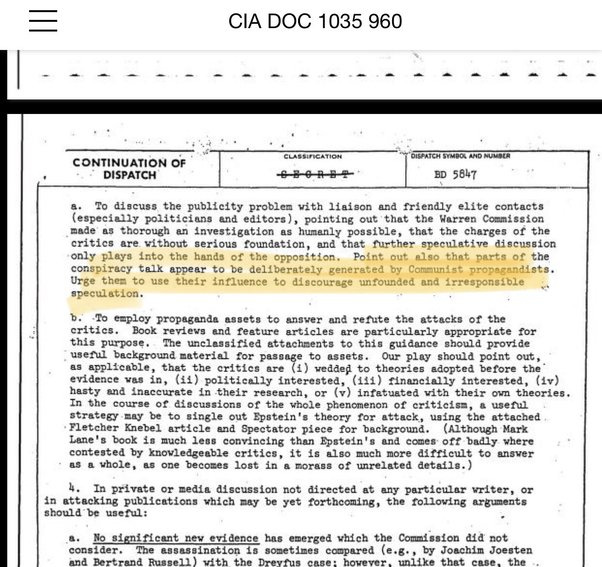

While it is structurally “weird”, I prefer introducing the main subject of whatever I am going to discuss before the explanations and previous evidence. At a superficial glance and on paper, “prebunking” seems to be a promising tool for combating various forms of manipulation in the age of network effects. It follows the same linguistic pattern as “conspiracy theory”, a term used and popularized (propagandized) by the CIA to combat, among other types of information, communist propaganda, and later adapted for use against anything the intelligence services perceived as a threat.

Prebunking has a covert premise similar to many other attempts at linguistic manipulation. What is linguistic manipulation? It is the use of language to influence, change, mold, and control someone’s thoughts, beliefs, and behavior; at its core, it is one of the pillars of cognitive warfare. It works by applying specific linguistic tools such as word frequency, syntax changes, and especially semantic shift (changing the meaning of words). Language is an absurdly powerful tool (as evidenced by my pen name), and changing the meaning of words and terms is one of the most effective long-term ways to shape and control people, and especially culture, creating a self-feeding loop of change.

By applying these linguistic “tricks”, any organization or interested party can mold how the average person, or even entire fields, see and employ certain terms such as prebunking, a concept and term that from my perspective is akin to a cognitive warfare tactic: pre-emptive propaganda. The stated justification is fighting disinformation, which is a known yet superficial aspect of Cognitive Warfare. The question then arises: what is classified as misinformation? Who decides what is misinformation? Government agencies, bureaucrats, organizations, and powerful individuals with a vested interest in certain narratives have the power to decide what information is considered misinformation. As a result, prebunking may serve as a tool for these entities to shape and control the flow of information to serve their specific goals.

One of the key dangers of prebunking is that it could be used as a tool for censorship and the suppression of dissenting opinions. By labeling certain ideas or viewpoints as "misinformation", those in power can use prebunking as a means to discredit and silence opposition. Another concern is that prebunking relies on the subjective judgments of those in power to decide what information is true or false. This creates the potential for bias and manipulation, as those with a vested interest in a particular narrative or agenda can use prebunking to shape public opinion to their advantage.

By pre-emptively attacking opposing views, those in power can create a sense of certainty and conformity around a particular narrative or belief system. This can lead to a dangerous form of groupthink, where dissenting opinions are not only silenced but actively discouraged and stigmatized.

One question you may have asked throughout this piece is “Does this somewhat relate to the pandemic we just went through?”. Now I want you to go to Google Scholar and use the search term “prebunk covid-19”. You can also run the same prebunking query against most publications involving psychology. Do you know what psychologists and psychological operations units were heavily involved in over the last 3 years? Nudging.

When we examine the concept closely, it becomes clear that prebunking is simply another facet of the wider field of nudging, with nudging itself belonging to the same class as psychological operations, and both being rather “primitive” forms of cognitive warfare. Prebunking fits almost perfectly with the concept of nudging, since both seek to influence people’s behavior and thinking, but the former does so preemptively.

I may rewrite the Memetic substack linked above, in which half of the theme was Twitter, and focus solely on Memetics/Mimetics, going further into Cognitive Warfare. The PLA (China’s People’s Liberation Army) has itself been vocal on this front recently. All these topics are incredibly important for the near future, and have been important since 2020, but as the old saying goes, better late than never.

I seldom, if ever, edit any of my substacks, but shortly after publishing this one (and therefore sending out the e-mail), a friend and former co-worker sent me this.

The role of psychological warfare in the battle for Ukraine

Social media disinformation and manipulation are causing confusion, fueling hostilities, and amplifying atrocities around the world

Preempting propaganda

By design, mis- and disinformation are more infectious and incendiary than factual information, which makes them particularly useful in wartime.

Preempting Russian propaganda affords a strategic advantage, as well as a psychological one, Singer said—it puts the Kremlin on its back foot, forcing it to react instead of making the first move and controlling the storyline.

Social media platforms are also limiting the flow of Russian disinformation more aggressively than they have in the past, Nguyen said. Twitter is adding labels to Russian state-sponsored media, while Meta is demoting such posts. Reddit is making it harder to find the subreddit r/Russia, and TikTok is limiting livestreams and uploads from Russia.

At the individual level, the same strategies that work for avoiding misinformation on other topics can help protect against psychological warfare, too. Singer and his colleagues call this “cyber citizenship”—a combination of digital literacy, responsible behavior, and awareness of the threat of online manipulation.

You can find out why I have avoided writing about Cognitive Warfare in the comment section of this substack or, if you want to put the puzzle together, in the substack I mentioned previously and linked above.

If you choose to support this work in any form, you have my gratitude. It helps me build this endeavor.